Supervised Learning

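The goal of supervised learning is to set up a learning process that compares the model's predictions with the actual outcomes recorded in the "training data" (input examples paired with their known correct outputs, or labels) and keeps adjusting the prediction model until its predictions reach a desired level of accuracy. Typical supervised learning problems include classification and regression. Commonly used algorithms include linear regression, decision trees, Bayesian classification, least-squares regression, logistic regression, support vector machines, and neural networks.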
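
A minimal sketch of this compare-and-adjust loop, assuming a binary classification task solved with logistic regression (one of the algorithms listed above) and trained by batch gradient descent in plain Python. The function names `train`, `accuracy`, and `predict_proba` and the parameters `target_acc`, `lr`, and `max_epochs` are hypothetical choices for illustration, not part of any library. Each pass first compares the predictions with the true labels and stops once training accuracy reaches the target; otherwise it takes one gradient step that nudges the weights to reduce the prediction error.

```python
import math

# Illustrative sketch: all helper names and parameters are hypothetical.


def sigmoid(z):
    """Numerically stable logistic function: maps a score to a probability."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, x):
    """Model prediction: probability that example x belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def accuracy(w, b, X, y):
    """Compare thresholded predictions (>= 0.5 means class 1) with the true labels."""
    correct = sum((predict_proba(w, b, x) >= 0.5) == (t == 1) for x, t in zip(X, y))
    return correct / len(y)


def train(X, y, target_acc=0.95, lr=0.1, max_epochs=1000):
    """Adjust w, b by gradient descent until training accuracy reaches target_acc.

    Returns (w, b, epochs_used, final_accuracy).
    """
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for epoch in range(max_epochs):
        # 1. Compare the current predictions with the known labels.
        acc = accuracy(w, b, X, y)
        if acc >= target_acc:
            return w, b, epoch, acc
        # 2. Adjust the model: one gradient step on the mean log loss.
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, t in zip(X, y):
            err = predict_proba(w, b, x) - t  # predicted probability minus actual label
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        m = len(y)
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b, max_epochs, accuracy(w, b, X, y)


# Toy, linearly separable 1-D data: negative x -> class 0, positive x -> class 1.
X = [[-2.0], [-1.0], [1.0], [2.0]]
y = [0, 0, 1, 1]
w, b, epochs, acc = train(X, y)
print(epochs, acc)  # prints: 1 1.0
```

With all-zero initial weights every example scores exactly 0.5 and is predicted as class 1, so the first comparison gives 50% accuracy; a single adjustment step then separates the toy data. The `max_epochs` cap keeps the loop from running forever if the accuracy target is never reached.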

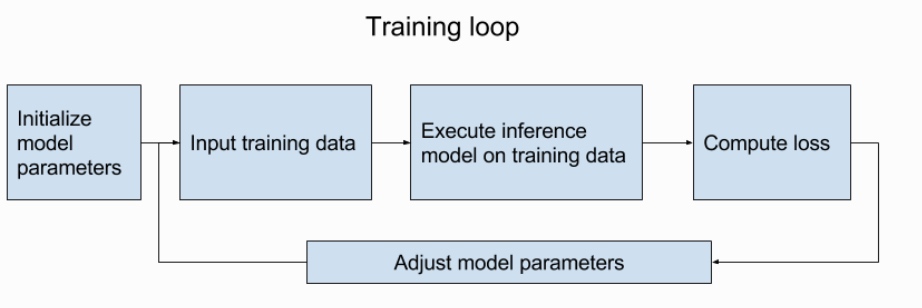

The overall training workflow of supervised learning is shown in the figure below.

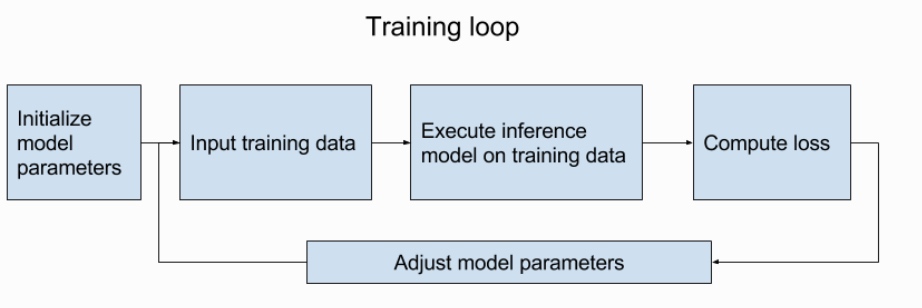
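
The overall workflow can also be sketched in code. This is a hedged illustration of one common pattern, and the stages in the figure may differ: split the labeled data into a training set and a held-out test set, fit the model on the training set only, then measure its error on the test set. The model is single-feature least-squares linear regression, a regression algorithm from the list above; `run_workflow`, `test_ratio`, and `seed` are hypothetical names, and the noise-free data on the line y = 2x + 1 is made up purely for demonstration.

```python
import random

# Illustrative sketch: helper names and the toy data are hypothetical.


def fit_least_squares(xs, ys):
    """Closed-form ordinary least squares for one feature: y ~ a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (yv - mean_y) for x, yv in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def mean_squared_error(a, b, xs, ys):
    """Average squared gap between predictions and actual targets."""
    return sum((a * x + b - yv) ** 2 for x, yv in zip(xs, ys)) / len(xs)


def run_workflow(data, test_ratio=0.25, seed=0):
    """Split -> train on the training set -> evaluate on the held-out test set."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(data)
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_ratio))
    test_set, train_set = shuffled[:n_test], shuffled[n_test:]
    xs_train, ys_train = zip(*train_set)
    a, b = fit_least_squares(xs_train, ys_train)
    xs_test, ys_test = zip(*test_set)
    return a, b, mean_squared_error(a, b, xs_test, ys_test)


# Noise-free toy data on the line y = 2x + 1, so the fit should recover it.
data = [(float(x), 2.0 * x + 1.0) for x in range(20)]
a, b, test_mse = run_workflow(data)
print(f"a={a:.3f}, b={b:.3f}, test MSE={test_mse:.2e}")
```

Evaluating on examples the model never saw during fitting gives a fairer estimate of how it will perform on new inputs than training accuracy alone.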